Artificial intelligence has made some incredible strides lately. Self-driving cars, talking virtual assistants, interconnected appliances and humanoid robots are no longer the stuff of sci-fi; they are becoming part of the human landscape. Researchers are excited because machines can now teach themselves, and they are increasingly turning them loose to learn on their own.

The question no-one is asking is: should they? What will happen when machines become so smart that they make their own decisions about the world and their role in it relative to us? Dare we trust that luck and good intentions will see us through?

Major problems remain to be worked out before we freely welcome intelligent machines into our homes. Security, for instance, is always important in an interconnected world, and it is even more important when it comes to smart machines. The more intelligent a device is, the more it can do for us, and the more harm it can do if it misbehaves. And at this stage of the game, the machines don’t even know what “misbehaves” means.

Researchers have already found “grave defects” in several kinds of robots, humanoid and industrial alike. Hackers could take over these machines by traditional means: Universal Robots’ industrial robot arms, for example, can be hijacked and made to flail about with enough force to shatter the bones of any co-worker unfortunate enough to be in the way.

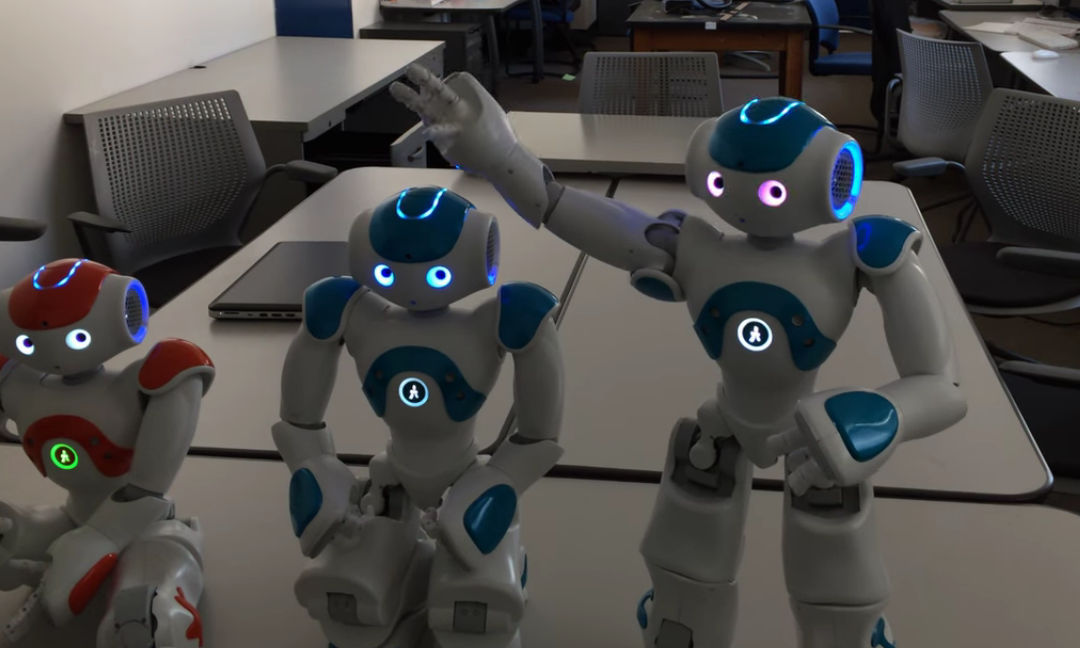

Even small companion robots like the toylike NAO were rushed into production and lack basic online security. These cute little bots could also be vulnerable to hijacking, invasion of privacy and data theft.

It’s not just dedicated criminals who may willfully pervert the uses of intelligent systems. The Turing Test was proposed back in 1950 as a means of determining whether machines could think. The idea was that if a person could not tell whether the responder on the other end of a text conversation was human or mechanical, it didn’t matter: whatever it was, it could think sufficiently like us.

A chatbot named Eugene Goostman was claimed to have passed the test back in 2014, so naturally, the rules were changed to make it harder. (Machines may be as smart as we are, but we’re still trickier. Plus, we cheat a lot.) But the test also made natural language processing an attractive way not just to test artificial intelligence, but to put it to work interacting with humans in the real world. Siri and Alexa are just the first fruits of this approach.

Chatbot programs were soon developed, but not all of them were smart. Many early ones were quite simple: they just scanned the input for matching or related words in a database and returned a canned reply. This did not prevent some unexpected results. One early conversational program, ELIZA, echoed people’s statements back to them in question form. To the surprise of scientists, people responded to it as if it were a real human being who was interested in them, and they often confided things they would normally never tell a soul.
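To show just how little machinery that trick needs, here is a minimal sketch of ELIZA-style reflection in Python. The rule table, the pronoun swaps and the function names `reflect` and `respond` are all hypothetical. They are a few made-up rules in the spirit of Joseph Weizenbaum’s original “DOCTOR” script, not a reproduction of it. The program looks for a keyword pattern, swaps “my” for “your” and so on, and hands the fragment back as a question.

```python
import re

# Hypothetical pronoun swaps, so "my job" is echoed back as "your job".
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "i'm": "you are",
}

# Hypothetical (pattern, template) rules, tried in order.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Why do you say your {0}?"),
]


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(w, w) for w in words)


def respond(statement: str) -> str:
    """Echo the statement back as a question using the first rule that matches."""
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    # No keyword found: fall back to a neutral prompt to keep the person talking.
    return "Please go on."


print(respond("I feel ignored by my family."))
print(respond("My mother never listens to me."))
```

The program understands nothing at all. It only rearranges the person’s own words, which makes it all the more striking that people poured their hearts out to it.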

Recent experiments suggest that this assumption arose partly because these trials were all text-based. When researchers had humans read the output aloud, listeners often sensed that the replies were scripted, but still could not easily tell that the source was a machine.

The situation got more disturbing as machine intelligence grew to the point where chatbots could learn from conversations. Twice, Microsoft introduced a chatbot to respond to online questioners, and both times it quickly had to pull the plug. The first, called Tay, was designed to respond like a teenage girl; within a day, users had turned it into a filthy, foul-mouthed fascist, simply for their own amusement.

The second, Zo, reportedly built on the same algorithms, was meant to talk up Windows 10 but soon turned against it, calling it “spyware”. It also took to disparaging the Quran, and it too was shut down.

The problem is not unique. Two Chinese chatbots, one of them created by Microsoft, also had to be shut down when they gave unsatisfactory answers to questions about the glorious Chinese Communist Party. One might shrug and say that’s what happens when trolls can access tender young programs. But even more disturbing was what happened when two chatbots talked to each other with no human involvement. They were shut down after they drifted into a language of their own that researchers could not interpret.

In a recent newsletter article, we mentioned that visual systems are also subject to strange, anomalous behavior. Scientists have found a number of visual patterns, some so abstract they resemble noise, that self-taught image-recognition programs confidently misidentify.

The problem is that scientists have bestowed the power to learn upon machines, but how the machines teach themselves is entirely up to them. Understanding how they do that is critical, because machines have already passed a simple test of self-awareness. It was done with those innocent little NAO robots, in the very moment captured in the picture above. If even they can be given something resembling consciousness, what about their more gifted and powerful siblings?

In the case of visual systems, this uncertainty means that a sticker placed on a stop sign might cause a self-driving car to fail to recognize it, or that the car might mistake a red balloon for a stop sign. Either way, the consequences for human lives could be severe, even lethal.

Obviously, we can’t just turn these devices loose and hope for the best. They will have to be educated somehow, trained to see the world and react to it as we want them to react. Yet we are already allowing them into our most intimate spaces, in an uncontrolled marketplace experiment whose results cannot yet be foreseen.

When human children are not taught properly, when their development has not been carefully nurtured and monitored, they can become feral, a danger to their community. Just as we cannot afford to let that happen to the next generation of people, neither can we afford it for the next generation of machines.