Our relationships with robots will be as complicated as our relationships with any beings possessing a certain amount of intelligence and free will. Like cats or small children, perhaps, though it is sincerely to be hoped that the machines will be far more obedient and predictable than either of those. But to serve us well, the robots are going to have to learn about us. They’re going to have to understand how we react and what our various emotional displays mean. This is no longer confined to lab studies: emotion detection by AI is already being put to work.

But will robots, like kitties and kids, be smart enough to manipulate our emotions? For babies, this comes quite naturally, as it is literally a case of life or death – an infant must secure the love of its parents to survive. So it instinctively knows how to smile and coo, when to cry, and when to latch on to its mother. Cats have to learn, but after living with a person for a bit, as any cat owner can tell you, they can judge a person’s mood as precisely as they know the person’s reach. Countless videos on YouTube demonstrate how cats are true masters of tricks to gain affection.

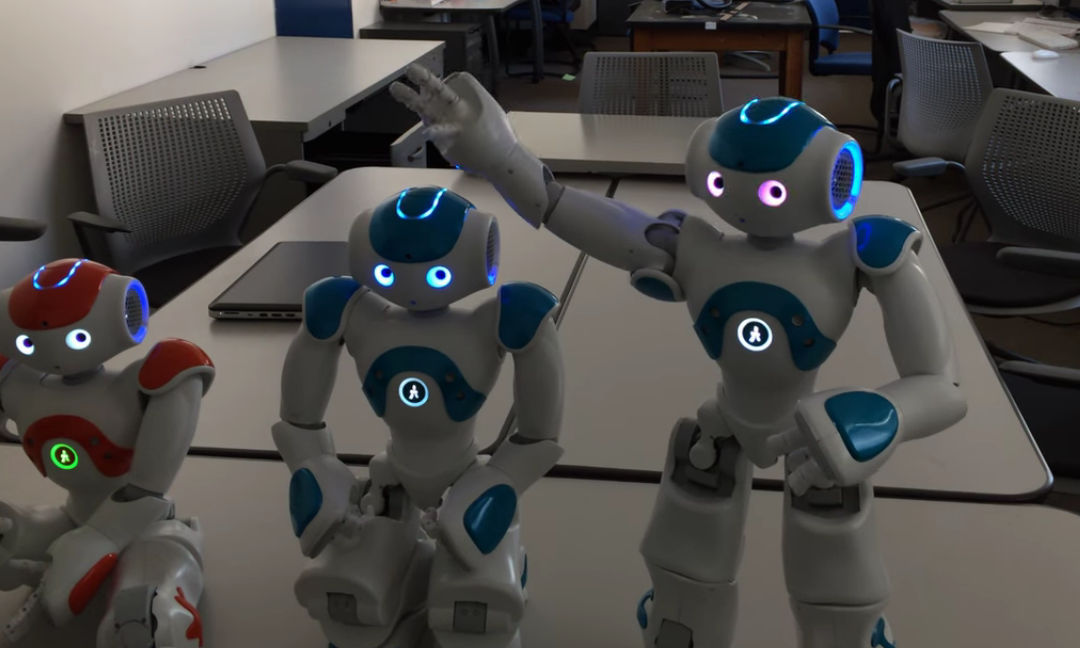

So along with all the other potential threats and promises of artificial intelligence comes that of emotional manipulation by machines. This may one day range from fake news designed to pull at the heartstrings to intimidating robot security guards to seductive sexbots. But far subtler uses are possible, and they’re already being demonstrated with cute little humanoid robots called NAO, the same kind that demonstrated self-consciousness.

In one experiment, robots exhibited different ways of relating to the subjects. Some were polite to the users, some were friendly, some less so. The subjects were told the robots could be turned off when the test was done. Some robots did not react to that, but other robots protested. Some bots said they were afraid of the dark; others pleaded outright for their lives, as if being turned off were death.

Many of the human subjects exposed to the pleas refused outright to “kill” the little machines, while the rest took a while to decide. Some said later they felt sorry for the bots; others were simply curious to see what would happen next. But the interesting thing is that the unfriendly robots who protested were the ones who most often avoided shutdown. Apparently, the more robots act like a person with emotions, the more humans tend to treat them that way.

Another experiment showed that while adult humans tend to be resistant to robotic influence, children are far less so. It worked like this: a group of three robots, or another of three humans, looks at a screen along with the subject to judge the distance between vertical lines. As expected, adults conformed to the unanimous opinions of other adults even when they were obviously quite wrong. The children (7 to 9 years old) were not tested with a group of adults, since tests already show how vulnerable they are to that, but only with the bots. Yet kids subjected to the same sort of peer pressure by the little robots readily succumbed to it.

The implications could be profound, particularly since today’s children will grow up in a world where robots and AI will surround them. Think of the potential implications for advertising, or what hackers could do, or simply designers with unconscious biases and prejudices. Perhaps, until some sort of safety standard is in place, we should shield the little ones from interacting with AI, just as we have to protect them from the worst content on the internet.

Killer robots may not be our ultimate worry, after all; robotic ad spokesmen may be. We may need the Three Laws of Robotics just to protect ourselves from our own creations being used against us as salesmen.